the developer philosopher

What is the “magic trick” behind becoming conscious?

Consciousness did not suddenly appear once our brain reached a certain level of complexity; it was the product of a long evolutionary process that shaped the brain. As a consequence, simply building ever more complex AI systems will not make it miraculously emerge. We first need to understand the underlying “magic trick”.

I’m rather amazed to see more and more articles focusing on the imminent rise of machine consciousness, although scientists don’t yet know how a collection of electrical signals in our brain leads to subjective experience. Serendipity sometimes allows sufficiently “sagacious” individuals to link apparently innocuous facts together and reach a valuable conclusion. However, that is not a scientific strategy for tackling a problem; it’s like trying to pick the winning lottery numbers. In this article I would like to highlight that an answer to the problem of consciousness might be in sight, and that we should consider it seriously instead of waiting for consciousness to emerge from the increasing complexity of our algorithms.

Why consciousness cannot emerge from complexity

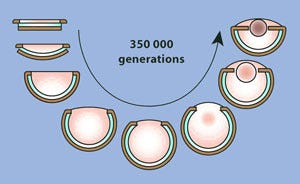

The evolution of eyes over a span of 350 000 generations

Most theories state that consciousness is caused by complexity, by oscillations in neural activity or by massive information integration, but they merely point to a magic trick without explaining it. More specifically, integration theories usually overlook the fact that highly integrated computations also occur outside consciousness, such as motor control or the actions performed by the autonomic nervous system (ANS). Last but not least, consciousness cannot just be a feeling that emerges from information in the brain, because we can report it. It must therefore act on the brain, supplying reportable information about itself.

According to the theory of evolution, human eyes did not suddenly appear fully formed; they evolved from simpler eyes, with fewer components, in ancestral species. Even unicellular organisms have tiny organelles that distinguish light from darkness. If, in every generation, small mutations slightly changed one of the parts, then step by step, over numerous generations, these changes could produce the human eye as it exists today. Something ethereal or indivisible, as consciousness is often depicted, cannot have come into existence through natural selection. First, if huge mutations (as emergence would require) are rare, a human eye has virtually zero probability of suddenly appearing, given its complexity, even over a large number of generations (not to mention that it also requires even more complex brain functions). On the other hand, if many huge mutations occurred in every generation, fatalities or permanent injuries within the species would be highly probable, and so would its extinction. Finally, even if a fully formed eye could suddenly appear, the environment would be unlikely to make it useful: what could a unicellular organism do with it?
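The probability argument above can be illustrated with a toy model similar to Richard Dawkins’ well-known “weasel” program. This is a minimal sketch under loudly stated assumptions, not a model of real eye evolution: an “eye” is a hypothetical list of `N_PARTS` parts, each taking one of `N_VARIANTS` variants, and a part counts as “right” when it matches a fixed target. A single huge mutation must get every part right at once, whereas cumulative selection changes one part per generation and keeps the change only if it does not make things worse. All names and numbers are illustrative.

```python
import random
from typing import List, Optional

# Hypothetical toy parameters: an "eye" made of 20 parts, each with 10 possible variants.
N_PARTS = 20
N_VARIANTS = 10


def single_jump_probability(n_parts: int, n_variants: int) -> float:
    """Probability that one random 'huge mutation' gets every part right at once."""
    return (1 / n_variants) ** n_parts


def matches(genome: List[int], target: List[int]) -> int:
    """Number of parts that already match the target (a crude fitness score)."""
    return sum(g == t for g, t in zip(genome, target))


def cumulative_selection(target: List[int], n_variants: int, rng: random.Random,
                         max_generations: int = 100_000) -> Optional[int]:
    """Mutate one part per generation and keep the mutant only if it is not worse.

    Returns the number of generations needed to reach the target, or None.
    """
    current = [rng.randrange(n_variants) for _ in target]
    for generation in range(max_generations):
        if current == target:
            return generation
        mutant = current.copy()
        # Small mutation: one randomly chosen part gets a random variant.
        mutant[rng.randrange(len(mutant))] = rng.randrange(n_variants)
        # Selection step: harmful changes are discarded, neutral ones are kept.
        if matches(mutant, target) >= matches(current, target):
            current = mutant
    return None


if __name__ == "__main__":
    rng = random.Random(0)
    target = [rng.randrange(N_VARIANTS) for _ in range(N_PARTS)]
    print("single jump probability:", single_jump_probability(N_PARTS, N_VARIANTS))
    print("cumulative selection, generations:", cumulative_selection(target, N_VARIANTS, rng))
```

With these made-up numbers, a single jump succeeds with a probability of about 10^-20, while cumulative selection typically reaches the target within a few thousand generations, because each generation only has to get one small step right.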

Why consciousness can evolve from attention

The Attention Schema Theory (AST) suggests a new path to explain consciousness as the product of natural evolution. Our brain needs to construct simplified models of reality because there is too much information to process fully. As a consequence, it evolved to process a few signals deeply at the expense of others: in short, signals compete and a winner emerges. This data-handling method is known as attention. Once the brain has attentive processing, can it influence behavior? The answer is yes: a centralized control of attention can coordinate all the senses and point the sensory apparatuses toward anything important, a.k.a. overt attention. The next evolutionary advance is the ability to shift your focus mentally without moving the sensory apparatuses, a.k.a. covert attention. To control that virtual movement, the brain needs an internal model of attention (a sketch of it, so to speak), and awareness is that model.
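The chain described above (competing signals, a winner, and an internal model used to steer attention covertly) can be sketched in a few lines. This is a hedged illustration, not AST’s formal model: the winner-take-all step is a plain `max`, and the names `Signal`, `attend`, `AttentionSchema` and `covert_shift`, the signal labels and the `boost` value are all hypothetical. The key point it tries to capture is that the schema stores only a coarse summary (what is attended), which is enough to control a covert shift without moving any sensor.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Signal:
    source: str       # hypothetical label, e.g. "sound:door slam"
    strength: float   # bottom-up salience of the signal


def attend(signals: List[Signal], bias: Optional[Dict[str, float]] = None) -> Signal:
    """Winner-take-all: signals compete and the strongest one (after any
    top-down bias) wins deep processing; the others are dropped."""
    bias = bias or {}
    return max(signals, key=lambda s: s.strength + bias.get(s.source, 0.0))


@dataclass
class AttentionSchema:
    """A deliberately coarse model of attention: it records *what* is attended,
    not the competition that produced the winner."""
    focus: Optional[str] = None

    def update(self, winner: Signal) -> None:
        self.focus = winner.source


def covert_shift(schema: AttentionSchema, goal: str, boost: float = 0.5) -> Dict[str, float]:
    """Control step: compare the schema's idea of the current focus with a goal and,
    if they differ, return a top-down bias. No sensor is moved (covert attention)."""
    if schema.focus == goal:
        return {}
    return {goal: boost}


if __name__ == "__main__":
    signals = [Signal("sound:door slam", 0.9), Signal("vision:book page", 0.6)]
    schema = AttentionSchema()
    schema.update(attend(signals))        # bottom-up winner: the loud noise
    print(schema.focus)                   # sound:door slam
    bias = covert_shift(schema, "vision:book page")
    schema.update(attend(signals, bias))  # same inputs, focus shifted internally
    print(schema.focus)                   # vision:book page
```

The inputs never change between the two calls to `attend`; only the internally generated bias does, which is the sense in which covert attention is a “virtual movement” that needs a model to be controlled.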

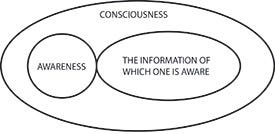

Consciousness encompasses both the information you are aware of and the act of experiencing it (i.e. awareness)

Although we say that we are aware of information, awareness is actually information computed by the brain. Just as color is a computed representation of a real thing (light), awareness is a computed representation of a real thing (one’s own attention). If so, why is awareness always depicted as a non-physical essence? Simply because the brain does not need the details of the underlying circuits, only a rough approximation that serves as a quick and efficient guide to behavior. To take another example, white light is not a single color but a mixture of all colors, so the pure, uniform white we perceive is an inaccurate model of the real world built by the brain. We probably also feel that awareness is an emergent phenomenon because the underlying phenomenon (i.e. attention) is itself an emergent state that information can enter: it results from the selection of a winner among competing signals.

Understanding and predicting another person’s behavior plays a key role in the survival of social species like Homo sapiens. Among various criteria, a person’s attentional state is probably the most relevant for predicting their behavior. As a consequence, the same machinery used to model one’s own attention eventually evolved to model another person’s attention, based on information computed in the brain such as gaze direction, body language, previous meetings, etc. In this view, consciousness is not something we or another person has, but a property our brain attributes to ourselves or to others. As such, the mysterious nature we usually report can actually be explained as a cognitive bias. This is probably also why humans naturally tend to attribute consciousness to many things, such as puppets (not to mention ventriloquists’ dummies), trees, etc.
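The idea that the same machinery predicts another agent’s attention from indirect cues can be sketched as a small scoring function. This is a toy sketch, not a claim about how the brain computes: the cue names mirror the examples in the paragraph above, but the weights in `CUE_WEIGHTS`, the helper names `predict_attention` and `attribute_awareness`, and the agents are hypothetical. The puppet case illustrates the cognitive-bias point: the model only sees cues, so it attributes awareness to anything that supplies them.

```python
from typing import Dict

# Hypothetical weights for the kinds of cues mentioned in the text.
CUE_WEIGHTS: Dict[str, float] = {"gaze": 0.6, "body_language": 0.3, "history": 0.1}


def predict_attention(cues: Dict[str, Dict[str, float]]) -> str:
    """Combine per-cue evidence (0..1) about candidate targets and return the
    target the observed agent is most likely attending to."""
    scores: Dict[str, float] = {}
    for cue, evidence in cues.items():
        weight = CUE_WEIGHTS.get(cue, 0.0)  # unknown cues are ignored
        for target, value in evidence.items():
            scores[target] = scores.get(target, 0.0) + weight * value
    if not scores:
        raise ValueError("no cues to predict from")
    return max(scores, key=lambda t: scores[t])


def attribute_awareness(agent: str, cues: Dict[str, Dict[str, float]]) -> str:
    """The model never checks what the agent really is; it only reads cues."""
    return f"{agent} is aware of {predict_attention(cues)}"


if __name__ == "__main__":
    alice = {"gaze": {"the cake": 1.0}, "body_language": {"the door": 1.0},
             "history": {"the cake": 1.0}}
    puppet = {"gaze": {"the audience": 1.0}}  # painted eyes pointing at the audience
    print(attribute_awareness("Alice", alice))        # Alice is aware of the cake
    print(attribute_awareness("The puppet", puppet))  # The puppet is aware of the audience
```

Nothing in `attribute_awareness` distinguishes a person from a puppet, which is the point: attribution depends on the observer’s model, not on what the observed thing actually has.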

The complete evolution process from attention to consciousness is summarized by the following figure.

Conclusion

Understanding a phenomenon is the first step toward engineering it, so if we can explain consciousness, we can hope to build the same functionality into our AIs. If the goal is to build an artificial algorithm that mimics humans, then it needs to be “aware”, i.e. it needs a model that predicts attention and applies it both to itself and to others. Because “consciousness” and “self-awareness” are vague, amorphous notions with no common definition, it is easy to mix up apples and oranges when reading articles like this one. I would have been greatly surprised if the robot had reported by itself what the journalist actually wrote, but that was not the case, so the claim should be put into perspective. More generally, the Turing Test mainly assesses social conventions: it doesn’t matter whether a computer is actually conscious or not, only what people think about it.

I am not an AI expert, although I have solid knowledge of computers and algorithms, and when I look at the existing AI literature it seems to me that covert attention is rarely studied (let alone modelled), except perhaps in the specific field of vision. This suggests two possibilities. Either researchers are going the wrong way or missing the key point when trying to create conscious AIs, or, as I tend to believe, AIs differ so much from humans that the concept is not relevant at all. AIs are designed, structured and operate in ways that have nothing in common with how humans evolved and behave under natural constraints. If one day an AI self-reports that it is conscious, the human signatures of consciousness are likely to be irrelevant to it. Should we just believe it, or change our own views on consciousness? In any case, it is very unlikely that an artificial intelligence unable to explain its reasoning in human concepts will be socially acceptable. Equipping it with human characteristics such as consciousness would probably be the only way for us to trust it and solve the black box problem: artificial consciousness out of necessity.

Note: the views expressed in this article are those of the author and do not necessarily reflect the views of the cited references.

source https://cryptocurrencyonline.co/the-next-step-towards-conscious-ai-should-be-awareness/
